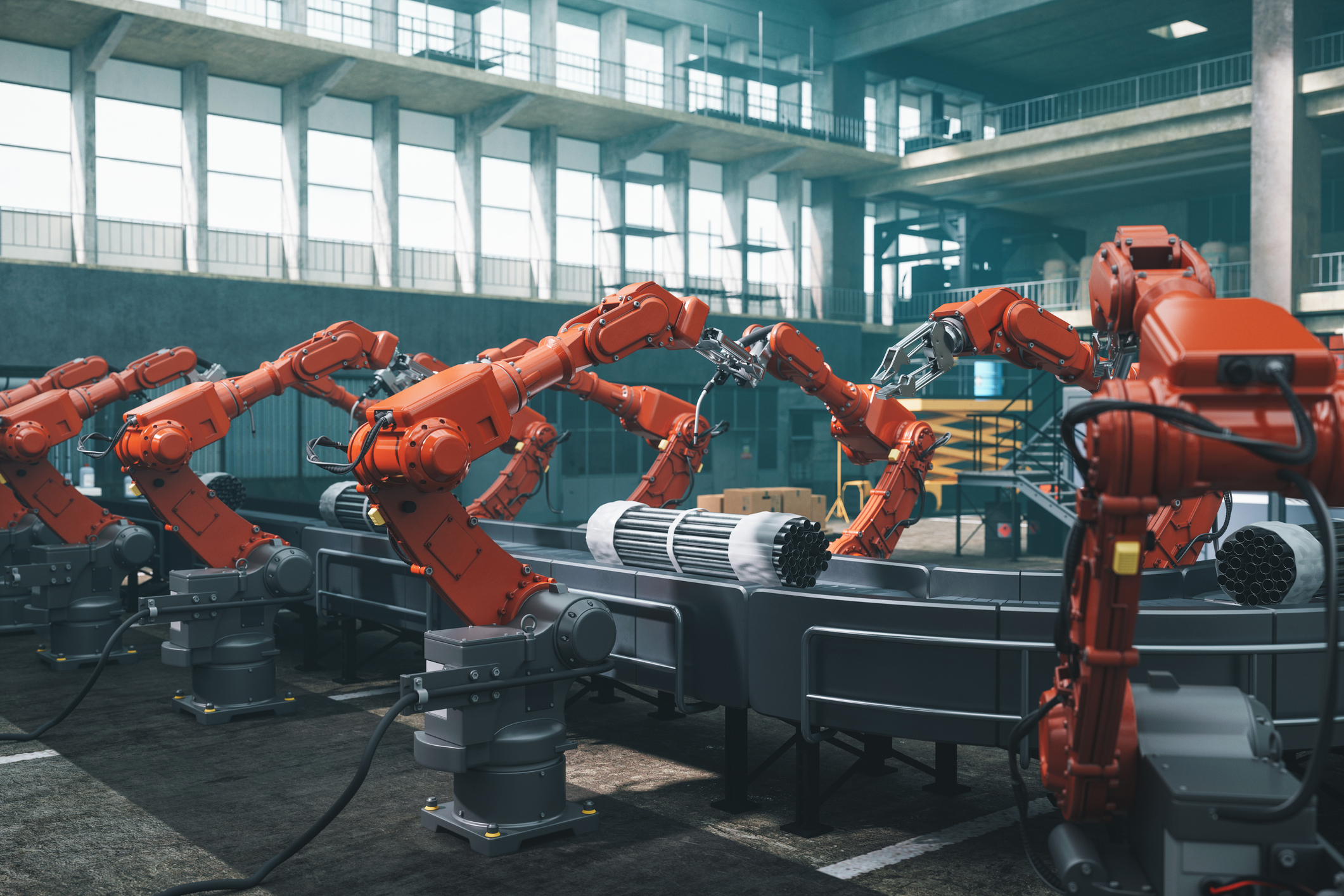

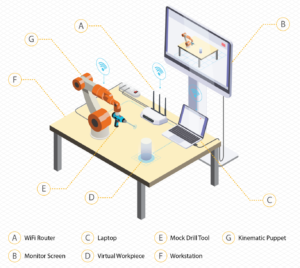

Collaborative robots, commonly referred to as “Cobots,” are among the most groundbreaking technological advancements of our time. Academics and industry experts firmly believe that Cobots have the potential to revolutionise global manufacturing. A Cobot is a context-aware robot equipped with artificial intelligence and vision capabilities, enabling it to safely coexist with both human operators and machines in the same workspace.

The adoption of Cobots in manufacturing is one of the key enablers of Industry 5.0. The concept of Industry 5.0 was first proposed by Michael Rada[i] in 2015, after it was felt that its predecessor, Industry 4.0, was unable to meet the increasing demand for personalisation and customisation of goods. By incorporating advanced technologies such as artificial intelligence, automated systems, the internet of things, and cloud computing, Industry 4.0 aimed to enhance operational efficiency and productivity by connecting the physical and virtual worlds. However, rapidly evolving global business dynamics shifted the industry paradigm from efficient production alone to one that also demands high-value mass customisation and personalisation of goods. It was widely believed that Industry 4.0 was unable to address these changes. The term Industry 5.0 was therefore coined to address changing industrial dynamics, with a focus on collaboration between advanced production systems, machines and humans.

Reaping the enormous benefits of this technology requires careful consideration of the risks that its adoption could pose to the well-being of human operators who work collaboratively with Cobots.

Ethical Considerations of Adopting Cobots

Ethical considerations when adopting Cobots encompass a wide range of social factors[ii]. As defined by the British Standards Institution[iii], an ethical hazard is any potential source of harm that compromises psychological, societal, and environmental well-being. While collaborative settings involving Cobots offer benefits such as reducing physically demanding tasks for humans, they have also brought new risks and ethical considerations that demand attention during planning and use. In the following sections, I discuss some of the ethical considerations of adopting Cobots.

Emotional Stress

Understanding the emotional stress that workers may experience can help in designing human-Cobot interaction systems that minimise stress and enhance the overall user experience. Cobots may cause emotional stress among users for several reasons. For instance, users might feel they have less control over their work environment when Cobots are involved, especially if the Cobots operate autonomously. This can lead to feelings of anxiety and stress. Moreover, Cobots are often used for tasks that require high precision and concentration, and the pressure to perform these tasks accurately can be mentally exhausting and stressful. The constant need to monitor and interact with Cobots can also trigger physiological stress responses, such as increased heart rate and tension. Organisations should consider these factors when designing and implementing Cobots.

Social Environment

By understanding potential disruptions to the social environment, manufacturers can develop strategies to mitigate workers’ concerns and create a harmonious work environment. Unless workers are involved in the design and planning of Cobot implementations, Cobots may disrupt the social harmony of the workplace in several ways, for example by raising concerns about job security or by causing anxiety and tension due to the fear of being replaced by robots. Their introduction can also create confusion and ambiguity about job roles, causing stress and disrupting team cohesion. Furthermore, the presence of Cobots can alter social interactions in the workplace, with some workers viewing them as teammates while others see them as intruders, potentially leading to conflicts. Additionally, the increasing autonomy of Cobots raises ethical questions about decision-making and accountability.

Social Acceptance

By understanding the community factors that shape social acceptance, organisations can develop strategies to enhance the acceptance of Cobots. Communities play a crucial role in determining the acceptance of new technologies, and several key factors influence the acceptance of Cobots. Different cultures exhibit varying levels of comfort with technology. Some cultures show greater trust in and enthusiasm for technological advancements, which can lead to greater acceptance of Cobots. The opinions and behaviours of peers, family, and colleagues can significantly affect an individual’s acceptance of Cobots. Communities with higher levels of education and awareness about the benefits and functionalities of Cobots tend to accept them more readily. Government policies and incentives that promote the use of Cobots can positively influence community acceptance. Supportive regulations and funding for Cobot integration can encourage businesses and individuals to adopt this technology.

Data Collection

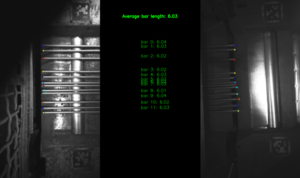

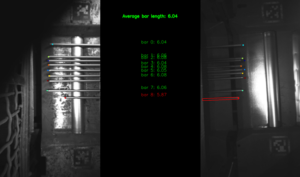

Firms adopting Cobots need to devise data management policies and assure workers that collected data will not be used by any third party. Because Cobots collect a variety of data through their safety systems, there is a risk that operator and user data could be collected, used, and sold without consent. Research indicates that many industry organisations are already interested in the potential value of this data for developing future products and services.

Addressing these ethical considerations can ensure that the adoption of Cobots contributes positively to society and aligns with our social values. By prioritising ethics, we can foster trust in and acceptance of Cobots in manufacturing.

[i] https://www.linkedin.com/pulse/industry-50-from-virtual-physical-michael-rada/

[ii] https://www.centreforwhs.nsw.gov.au/__data/assets/pdf_file/0019/1128133/Work-health-and-safety-risks-and-harms-of-cobots.pdf

[iii] https://knowledge.bsigroup.com/products/robots-and-robotic-devices-guide-to-the-ethical-design-and-application-of-robots-and-robotic-systems